Use the DORA key metrics to measure software delivery performance

Gain an indication of how the performance of your software delivery can lead to the outcomes your business needs

This year marked the 10th anniversary of the DORA research report (https://dora.dev/research/2024/). DORA, which stands for the "DevOps Research and Assessment" group, has been instrumental in understanding and advancing DevOps practices in software development and IT operations.

DORA was founded by Dr. Nicole Forsgren, Jez Humble, and Gene Kim, who began research in the early 2010s with the goal of understanding what makes high-performing technology organizations successful.

Since 2014, DORA has been publishing an annual report, based on a survey of thousands of IT professionals worldwide. DORA was acquired by Google in 2018, and the research has continued, incorporating research conducted with, and on, Google’s army of engineers.

Four Key Metrics

The annual survey, which I am still digesting this year (at 120 pages it’s too long to simply chuck at an LLM for summarisation), looks at trends around adoption of technologies and practices that contribute to effective Software Delivery.

Running the survey for such a long time, and accompanying it with additional research, has allowed them to identify four key metrics of software delivery performance:

Deployment Frequency

Lead Time for Changes

Failed Deployment Recovery Time

Change Failure Rate

These metrics have become a common way of measuring the effectiveness of software development and delivery teams. They offer a view of how quickly and safely software can be built and delivered to customers:

Deployment Frequency - how often application changes are deployed to production. Higher deployment frequency indicates a more agile and responsive delivery process.

Lead Time for Changes - the time it takes for a code commit or change to be successfully deployed to production. It reflects the efficiency of your delivery pipeline.

Failed Deployment Recovery Time (originally Mean Time to Recover) - the time it takes to recover from a failed deployment. A lower recovery time indicates a more resilient and responsive system.

Change Failure Rate - the percentage of deployments that cause failures in production, requiring hotfixes or rollbacks. A lower change failure rate indicates a more reliable delivery process.
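
To make the four definitions above concrete, here is a minimal sketch of how they might be calculated from a list of deployment records. The `Deployment` record shape, its field names and the choice of the median as the summary statistic are assumptions made for illustration; they are not DORA's official calculation, and your own data will almost certainly look different.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from statistics import median
from typing import Optional


@dataclass
class Deployment:
    # Hypothetical record shape; the field names are illustrative only.
    committed_at: datetime                   # when the change was committed
    deployed_at: datetime                    # when it reached production
    failed: bool = False                     # did it cause a failure in production?
    recovered_at: Optional[datetime] = None  # when service was restored, if it failed


def four_key_metrics(deployments: list[Deployment], period_days: int) -> dict:
    """Summarise the four key metrics over a reporting period of period_days."""
    if not deployments or period_days <= 0:
        raise ValueError("need at least one deployment and a positive period")
    failures = [d for d in deployments if d.failed]
    # Only failures with a known recovery time contribute to recovery time.
    recoveries = [d.recovered_at - d.deployed_at for d in failures if d.recovered_at]
    return {
        "deployments_per_day": len(deployments) / period_days,
        "median_lead_time": median(d.deployed_at - d.committed_at for d in deployments),
        "change_failure_rate": len(failures) / len(deployments),
        "median_recovery_time": median(recoveries) if recoveries else None,
    }


# Made-up sample data: four deployments in one week, one of which failed.
base = datetime(2024, 1, 1, 9, 0)
sample = [
    Deployment(base, base + timedelta(hours=4)),
    Deployment(
        base + timedelta(days=1),
        base + timedelta(days=1, hours=8),
        failed=True,
        recovered_at=base + timedelta(days=1, hours=9),
    ),
    Deployment(base + timedelta(days=2), base + timedelta(days=3)),
    Deployment(base + timedelta(days=5), base + timedelta(days=5, hours=2)),
]

metrics = four_key_metrics(sample, period_days=7)
for name, value in metrics.items():
    print(f"{name}: {value}")
```

The median is used here because a single slow change can badly skew an average; whether that is the right choice for your organisation is a judgment call.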

The DORA offering includes a quick check that lets you compare your performance in these four measures against their global benchmarks (https://dora.dev/quickcheck/).

Depending on your organisation, this exercise might be somewhat depressing! It might also be a catalyst for a “how might we…?” conversation with decision makers and technical people, and become aspirational.

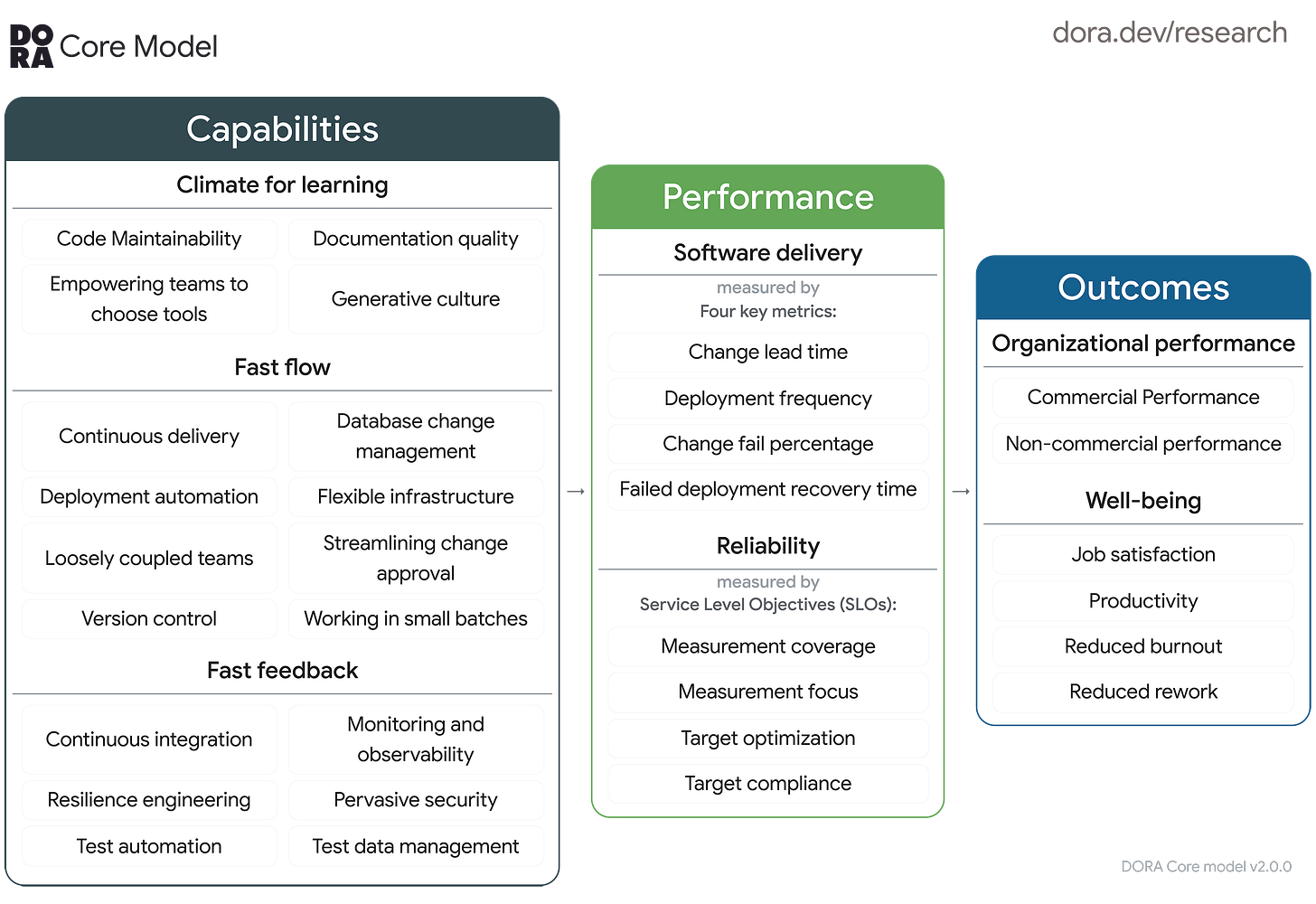

The Core Model - context for the Four Key Metrics

As with any set of “standard” measures, it’s well worth considering the context in which these metrics exist. DORA elevated the four key metrics out of their broader analysis of what their research revealed, which is about the interdependence of a range of organisational factors and dynamics. After all, software delivery is an aspect of the socio-technical system that is a modern business, not an isolated end in itself.

So the answers to “how might we reduce change lead time?” are likely to be found in a number of areas, some of which exist in a technology department’s sphere of control, such as Version Control, but many of which reflect the broader culture of an organisation, such as the Generative Culture of a learning organisation.

The context also considers the why? DORA’s research provides a path from some potentially arcane discussions about branching strategy, Infrastructure as a Service and continuous integration to the metrics that business leaders ultimately care about such as revenue and customer satisfaction.

DORA is a fantastic resource (https://dora.dev/research/?view=detail), and it is well worth diving into the detailed view to further understand the inter-relationship of these different aspects of culture and technology.

Practical application of the DORA key metrics

There is no shortage of things to measure in a business, so why would you choose DORA metrics?

My first experience of applying DORA metrics was in a telco environment, with a fair split between “dev” and “ops”.

The appetite to try it out was surprisingly high, which I attribute largely to the simplicity of the measures and being able to compare them to global benchmarks. One of the characteristics of the organisation at the time was a lack of shared language and data around software delivery performance, alongside the inevitable tension between teams who often viewed speed and quality as opposites, rather than complementary.

The actual application of the measures was a bit more difficult than gaining agreement to use them. There are several challenges with the adoption of a measure:

the definition - fortunately DORA has this one covered… mostly

the calculation - beautifully simple, very easy to apply in whatever tool you choose. There’s even a GitHub repo (https://github.com/dora-team/fourkeys) with a Python implementation. (The repo isn’t being maintained any more, but the core logic is solid.)

sourcing the data - the tricky part. The challenge we found was that the data was distributed across numerous systems. For application software teams, most development work was managed in Jira, code lived in Bitbucket, and change was tracked in an ITIL tool (Ivanti Heat). But not everything was. Some development was outsourced, using different systems. And some code was still managed in an SVN repo. And then there were the network teams, and teams working on operational systems.
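
One way to tame the data-sourcing problem described above is to map each system's export onto a single common record before calculating anything. The sketch below is illustrative only: the `ChangeRecord` shape, the two adapter functions and the column names in the sample rows are all hypothetical, and real exports from your tools will differ.

```python
from dataclasses import dataclass
from datetime import datetime


@dataclass(frozen=True)
class ChangeRecord:
    # Hypothetical common shape that every source is mapped onto.
    change_id: str
    source: str
    deployed_at: datetime


def from_change_ticket(row: dict) -> ChangeRecord:
    # Assumed columns from a change-tracking export (day/month/year dates).
    return ChangeRecord(
        change_id=row["ticket"],
        source="change-ticket",
        deployed_at=datetime.strptime(row["closed"], "%d/%m/%Y %H:%M"),
    )


def from_pipeline_event(event: dict) -> ChangeRecord:
    # Assumed payload from a build/deploy pipeline (ISO 8601 timestamps).
    return ChangeRecord(
        change_id=event["id"],
        source="pipeline",
        deployed_at=datetime.fromisoformat(event["finished_at"]),
    )


# Made-up sample rows standing in for two different systems.
tickets = [{"ticket": "CHG-101", "closed": "03/01/2024 14:30"}]
events = [{"id": "build-42", "finished_at": "2024-01-02T10:15:00"}]

# Merge everything into one timeline, oldest deployment first.
records = sorted(
    [from_change_ticket(t) for t in tickets]
    + [from_pipeline_event(e) for e in events],
    key=lambda r: r.deployed_at,
)

for record in records:
    print(record.deployed_at.isoformat(), record.source, record.change_id)
```

Keeping one small adapter per source means that a new system, such as an outsourced team's tracker, needs only one new function rather than changes to the metric calculation itself.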

Alternatives to DORA

The point of DORA is to give you an indication of the health of your overall system. There is a lot of research to suggest that the DORA four keys are a reasonable model.

But there are alternatives. Flow metrics are another way to judge your system. There is some overlap between the two: change lead time and deployment frequency both give a view of your pace. Flow is predicated on the assumption that speed is king, which, when coupled with a good mix of types of change, is a very strong hypothesis for predicting successful software teams.

Other metrics are available, also. There isn’t a correct answer to the question of which metrics should I use to measure the performance of software delivery. Plenty of incorrect answers, but that’s what LinkedIn is for.

Pitfalls of DORA

The main pitfall with implementing DORA is believing it is sufficient. Even if you are a development manager, relying solely on DORA metrics is not enough. The four key metrics were identified as being the key indicators of whether a software development organisation is “high performing”; they are indicators, not the outcomes themselves.

Many, if not most, of your stakeholders will care little about the DORA metrics. They are focused on what comes after - the outcomes. Revenue. Customer Satisfaction. Team well-being. Trying to convince any executive of the merits of DORA in isolation is simply not worth the effort.

You also need to consider your context, particularly if you use the global benchmarks. At one organisation I worked at, we managed to improve change lead time by about 400%, but from a global benchmark perspective we were still way off the upper quartile.

But was the massive improvement worth the effort? Absolutely. And the benchmarks, coupled with our success in attaining measurable improvement, gave us the encouragement needed for the next push.